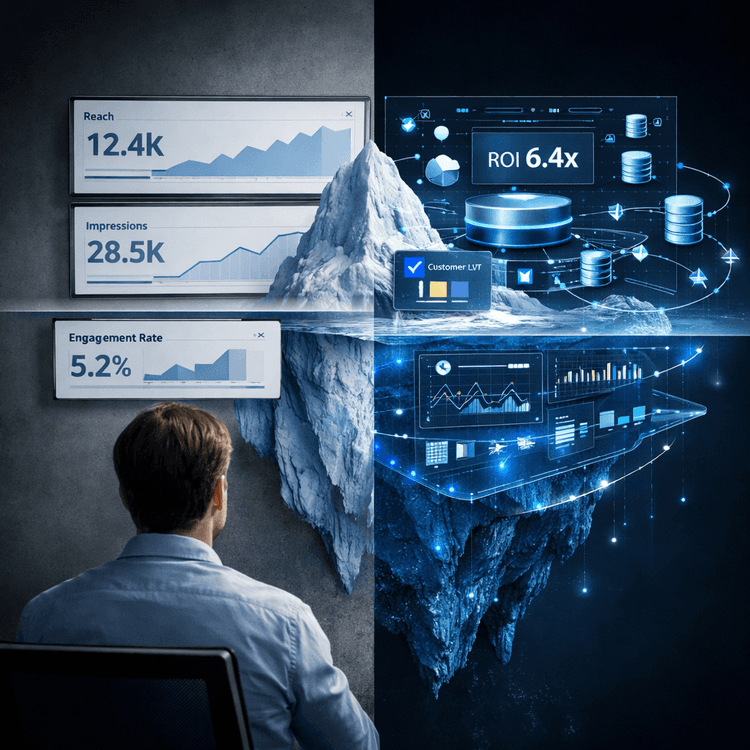

Native analytics on platforms like Meta and LinkedIn are useful. They are well designed. They provide immediate visibility into how content performs inside their ecosystems.

But they are built for in-platform optimization, not executive decision-making.

There is a structural difference between optimizing posts and steering a marketing organization. The first is tactical. The second is strategic. The gap between those two realities is where many teams feel friction, uncertainty, and slow iteration.

This is the analytics gap.

Reach and impressions tell you distribution. They answer a simple question:

Did the content get delivered?

They do not answer:

- Did it create business value?
- Did it influence pipeline?
- Did it attract qualified candidates?
- Did it reduce support tickets?

Distribution metrics are necessary. Without delivery, nothing else happens. But they are not sufficient for decision-making at a business level.

A post can generate high reach and still fail commercially. A post with lower reach can still drive more meaningful intent. Native dashboards rarely connect these layers clearly.

Serious marketing requires more than visibility into exposure. It requires visibility into impact.

Engagement rate is often treated as the north star metric. It appears clean, comparable, and easy to report.

But it blends fundamentally different behaviors into one percentage.

- A like and a save are not the same.
- A short comment and a qualified buying question are not the same.
- A passive reaction and an inbound direct message are not the same.

When all of these interactions collapse into a single engagement number, nuance disappears.
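To make the collapse concrete, here is a minimal Python sketch. The `PostStats` fields, the sample numbers, and the rate formula (all interactions divided by impressions) are assumptions chosen for illustration; each platform defines its own engagement rate.

```python
from dataclasses import dataclass


@dataclass
class PostStats:
    """Hypothetical per-post counts; field names are illustrative only."""
    impressions: int
    likes: int
    comments: int
    saves: int
    shares: int


def engagement_rate(p: PostStats) -> float:
    """Blended rate: every interaction counts the same, divided by impressions."""
    return (p.likes + p.comments + p.saves + p.shares) / p.impressions


# Post A is mostly passive likes; post B is mostly saves and shares.
post_a = PostStats(impressions=10_000, likes=480, comments=15, saves=3, shares=2)
post_b = PostStats(impressions=10_000, likes=200, comments=60, saves=150, shares=90)

# Both posts have 500 interactions on 10,000 impressions,
# so the blended rate cannot tell them apart.
print(engagement_rate(post_a), engagement_rate(post_b))
```

Both posts report the same rate, even though the second one shows far more of the behaviors that usually signal intent.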

This is not a flaw in the platforms. It is a reflection of their design. Native dashboards are built to help creators optimize content inside the platform environment. They are not built to reflect complex commercial intent.

If reporting stops at engagement rate, decision-making remains surface level.

The analytics gap becomes most visible when marketing moves beyond isolated posting and starts operating at scale.

Common friction points include:

- Siloed dashboards per platform, making it difficult to compare performance cleanly between Meta and LinkedIn
- Limited cross-channel attribution, preventing teams from evaluating themes and formats end-to-end
- Fragmented exports and shifting metric definitions, breaking historical consistency
- No direct way to calculate business-aligned KPIs such as lead generation, recruitment quality, or support deflection

When reporting lives inside separate dashboards, performance conversations become fragmented. Teams debate which platform “worked better” without a shared measurement framework. Executive stakeholders receive reports that are descriptive rather than directional.

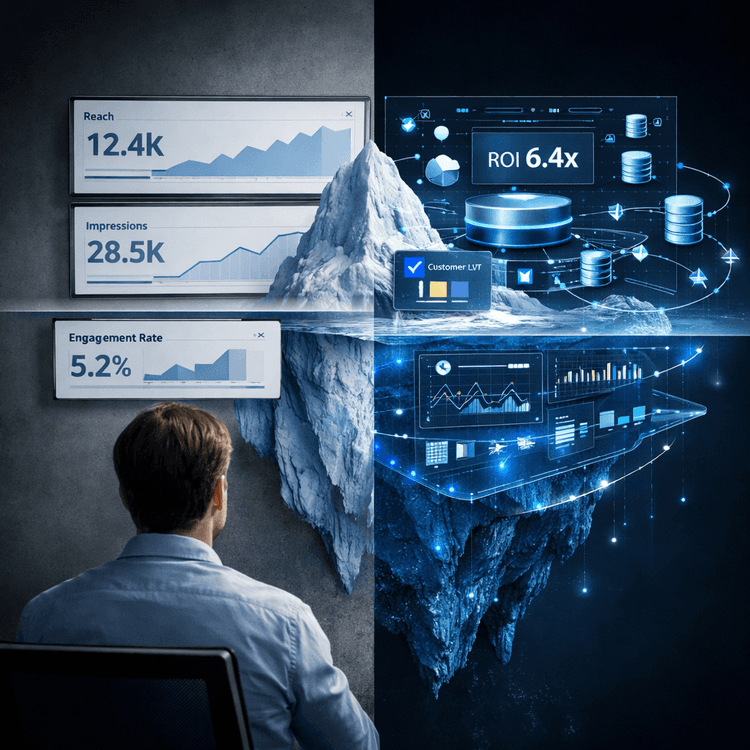

This is where maturity becomes visible. Mature marketing organizations do not rely exclusively on platform-native views. They build owned analytical layers.

The structural shift happens when analytics move from platform dashboards into owned infrastructure.

In practice, this means:

- Data is pulled via API
- It is stored in a central database
- It is normalized across platforms
- Definitions are controlled internally
- Historical logic remains stable
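The steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not a reference implementation: the raw field names (`reach`, `impressionCount`, and so on) are hypothetical stand-ins for whatever each platform's API actually returns, the API calls themselves are omitted, and an in-memory SQLite database stands in for the central store.

```python
import sqlite3

# Hypothetical raw rows, as if already fetched from each platform's API.
# These field names are illustrative, not the real API schemas.
meta_rows = [{"post_id": "m1", "reach": 8000, "reactions": 300, "saves": 40}]
linkedin_rows = [{"urn": "l1", "impressionCount": 5000, "likeCount": 120}]


def normalize_meta(row: dict) -> tuple:
    # Mapping "reach" to the shared "delivered" column is an internal
    # definition choice; the point is that the team owns it.
    return ("meta", row["post_id"], row["reach"], row["reactions"], row.get("saves"))


def normalize_linkedin(row: dict) -> tuple:
    # No saves in this hypothetical payload, so store NULL rather than 0.
    return ("linkedin", row["urn"], row["impressionCount"], row["likeCount"], None)


conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE post_metrics (
        platform  TEXT NOT NULL,
        post_id   TEXT NOT NULL,
        delivered INTEGER NOT NULL,
        reactions INTEGER NOT NULL,
        saves     INTEGER,
        PRIMARY KEY (platform, post_id)
    )
    """
)

rows = [normalize_meta(r) for r in meta_rows]
rows += [normalize_linkedin(r) for r in linkedin_rows]
conn.executemany("INSERT INTO post_metrics VALUES (?, ?, ?, ?, ?)", rows)

# One query now covers both platforms under the same column definitions.
result = conn.execute(
    "SELECT platform, post_id, delivered FROM post_metrics ORDER BY platform"
).fetchall()
print(result)
```

Keeping the mapping functions in version control is one way to keep historical logic stable: when a platform changes a metric, only the mapping changes, not the reporting schema.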

Instead of accepting whatever metric composition a dashboard provides, teams can calculate their own.

This creates three fundamental improvements:

- Control over metric definitions
- Consistency over time
- Trust in reported numbers

When definitions are owned, reporting becomes repeatable. When data is centralized, comparisons become meaningful. When calculations are auditable, leadership confidence increases.

The conversation shifts from “What does this dashboard show?” to “What does our data model say?”

Once analytics move into a structured database environment, the range of computable metrics expands significantly.

Examples of decision-grade metrics include:

- Weighted engagement score that values saves, shares, qualified comments, and inbound messages more heavily than passive reactions
- Content decay rate showing how quickly a post loses traction and when recycling or republishing makes sense
- Cross-platform resonance index identifying themes that perform consistently regardless of channel
- Time-to-approval impact measuring how delays in publishing reduce performance and limit momentum

These are not vanity metrics. They are structural indicators of performance efficiency.
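As one possible shape for a weighted engagement score, consider the sketch below. The interaction kinds, the weights in `WEIGHTS`, and the per-1,000-impressions scaling are all assumptions for illustration; a real team would set and document its own values.

```python
# Hypothetical weights: higher-intent interactions count for more.
WEIGHTS = {
    "reaction": 1.0,
    "comment": 2.0,
    "qualified_comment": 5.0,
    "share": 4.0,
    "save": 4.0,
    "inbound_message": 8.0,
}


def weighted_engagement_score(counts: dict[str, int], impressions: int) -> float:
    """Weighted interactions per 1,000 impressions.

    Raises KeyError for an interaction kind with no defined weight,
    so new kinds cannot enter the score silently.
    """
    if impressions <= 0:
        return 0.0
    total = sum(WEIGHTS[kind] * n for kind, n in counts.items())
    return 1000 * total / impressions


# Two posts with the same number of raw interactions (500 each).
passive_post = {"reaction": 480, "comment": 15, "save": 3, "share": 2}
intent_post = {
    "reaction": 200,
    "comment": 40,
    "qualified_comment": 20,
    "save": 150,
    "share": 90,
}

print(weighted_engagement_score(passive_post, 10_000))
print(weighted_engagement_score(intent_post, 10_000))
```

With these assumed weights, the second post scores well above the first despite an identical interaction count, which is the distinction a blended engagement rate hides.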

For example, measuring content decay rate can influence posting cadence strategy. Measuring time-to-approval impact can justify operational redesign. Measuring cross-platform resonance can inform campaign theme prioritization.
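Content decay rate can be estimated in several ways. The sketch below assumes daily impressions fall off roughly exponentially and fits a straight line to the logarithm of the daily counts; the function names and sample series are hypothetical, and other decay models may fit real data better.

```python
import math


def decay_rate(daily_impressions: list[int]) -> float:
    """Exponential decay constant per day.

    Computed as the negated least-squares slope of ln(impressions)
    against the day index. Days with zero impressions are skipped
    because their logarithm is undefined.
    """
    points = [(day, math.log(v)) for day, v in enumerate(daily_impressions) if v > 0]
    if len(points) < 2:
        raise ValueError("need at least two days with non-zero impressions")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return -sxy / sxx


def half_life_days(rate: float) -> float:
    """Days until daily impressions halve; infinite if the post is not decaying."""
    if rate <= 0:
        return math.inf
    return math.log(2) / rate


# Made-up series in which impressions halve every day.
series = [4000, 2000, 1000, 500]
print(half_life_days(decay_rate(series)))
```

A short half-life suggests a post is exhausted quickly and may be a candidate for republishing, while a long one suggests the cadence can be relaxed for that format.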

When analytics reach this level, they influence how marketing operates, not just how it reports.

The most underestimated impact of unified analytics is speed.

When performance data is fragmented, iteration slows down. Teams debate interpretations. Stakeholders question definitions. Reports require manual consolidation. Confidence drops.

Unified analytics reduces friction:

- Fewer debates about data accuracy
- Faster identification of winning formats
- Quicker elimination of underperforming themes
- Clear visibility into cross-channel performance

When analytics are paired with workflow and approval systems inside one environment, improvement compounds. Insights feed directly into execution. Execution feeds back into measurement. The cycle tightens.

Speed increases not because teams work harder, but because decision latency decreases.

Over time, this compounds into competitive advantage.

Relying exclusively on native analytics is not wrong. It is simply incomplete for organizations aiming at scale.

The shift toward decision-grade analytics reflects a broader evolution:

- From posting to operating
- From reporting to steering
- From visibility to impact

Marketing maturity is not defined by the number of dashboards reviewed each week. It is defined by the quality of decisions those dashboards enable.

When analytics are unified, normalized, and aligned with business goals, marketing becomes measurable in the language executives understand.

If you want to move from dashboard reporting to decision-grade analytics, register at www.abev.ai and try the trial version.